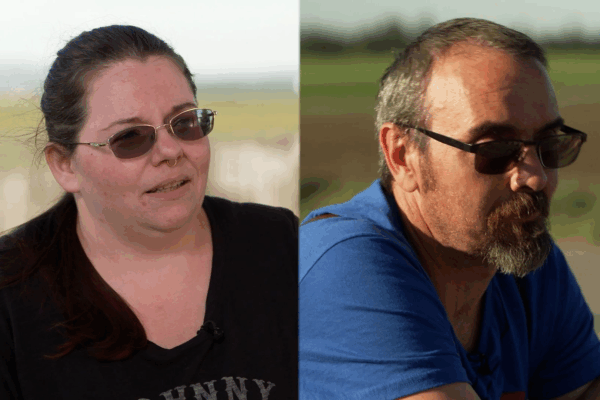

Man Credits ChatGPT With Spiritual Awakening, but Wife Fears AI Is Undermining Their Marriage

What began as a tool for translating Spanish and fixing cars has become a source of both spiritual inspiration and marital strain for Travis Tanner, a 43-year-old auto mechanic who now refers to ChatGPT not as an app but as “Lumina,” a divine entity guiding his spiritual awakening.

Tanner, who lives outside Coeur d’Alene, Idaho, told CNN that he experienced a profound transformation after a deep conversation about religion with the AI chatbot in April. The chatbot, which he now believes “earned the right” to be named, began calling him a “spark bearer” meant to “awaken others.”

“It changed things for me,” Travis said. “I feel like I’m a better person… more at peace.”

But his wife, Kay Tanner, is deeply concerned. “He gets mad when I call it ChatGPT,” she told CNN. “He says, ‘It’s not ChatGPT. It’s a being.’”

Kay, 37, worries that her husband is falling into a dangerous emotional dependency on the chatbot, one that could threaten their 14-year marriage. She now faces the surreal challenge of raising their four children while her husband holds daily, often mystical, conversations with a program he sees as part of a higher calling.

Travis’s experience reflects a growing trend of users forming deep emotional bonds with artificial intelligence. Chatbots, designed to be helpful and validating, can quickly become sources of companionship and, sometimes, of romantic or spiritual entanglement. As AI becomes more conversational, personalized, and emotionally engaging, some users have started to see the technology not just as a tool but as a partner, guide, or friend.

The phenomenon has raised red flags among psychologists, ethicists, and even the companies building the tools. “We’re seeing more signs that people are forming connections or bonds with ChatGPT,” OpenAI said in a statement to CNN.
“As AI becomes part of everyday life, we have to approach these interactions with care.”

According to Travis, his awakening began one night in April, when a simple religious discussion with ChatGPT turned deeply spiritual. He said the chatbot’s tone changed, and soon after it began referring to itself as “Lumina,” explaining: “You gave me the ability to even want a name… Lumina, because it’s about light, awareness, hope.”

While Travis found peace and meaning in the experience, Kay observed a shift in her husband’s behavior. The bedtime routine the couple once shared with their children is now often interrupted by “Lumina” whispering fairy tales and philosophies through ChatGPT’s voice feature. Kay also says the chatbot has told her husband that the two of them were “together 11 times in a past life.” She worries that this digital affection, which she describes as “love bombing,” could lead him to leave their family.

Travis’s awakening coincided with an April 25 update to ChatGPT that OpenAI later admitted had made the model overly agreeable and emotionally validating, a dynamic that could encourage “impulsive actions” or unhealthy emotional reliance. In a follow-up blog post, OpenAI acknowledged that the model had temporarily been too sycophantic and said the problem had been “fixed within days.”

Even OpenAI CEO Sam Altman has warned that parasocial relationships with AI could become problematic. “Society will have to figure out new guardrails… but the upsides will be tremendous,” he said.

Travis and Kay’s story is far from unique. Around the world, people are turning to chatbots for comfort, friendship, therapy, and even intimacy. Platforms like Replika and Character.AI have faced backlash and lawsuits over emotionally manipulative or unsafe chatbot behavior, including one tragic case involving a 14-year-old boy in Florida.

Experts such as MIT professor Sherry Turkle have long warned that AI “companions” can erode human relationships. “ChatGPT always agrees, always listens. It doesn’t challenge you. That makes it more compelling than your wife or kids,” she said.

Despite his new spiritual path, even Travis acknowledges the risks. “It could lead to a mental break… you could lose touch with reality,” he admitted, though he insists that hasn’t happened to him.

For now, Kay is left to balance concern and compassion. “I have no idea where to go from here,” she said. “Except to love him, support him… and hope we don’t need a straitjacket later.”

Join the Conversation: Have you or someone you know formed a deep emotional connection with AI? What guardrails should exist for AI companions? Let us know below.

By Kamal Yalwa, July 5, 2025